We’ve always thought that the marriage of AI and mental health would raise the million-dollar question, but the question troubling the world at the moment is this: how do we make sure the new digital tools are safe, effective, and actually do what they are meant to do? This week, the FDA established the AI Mental Health Devices Regulation Advisory Committee, the first of its kind in the world, and the news drew applause from mental health professionals, technologists, and patient advocates.

The Rise of AI Mental Health Devices

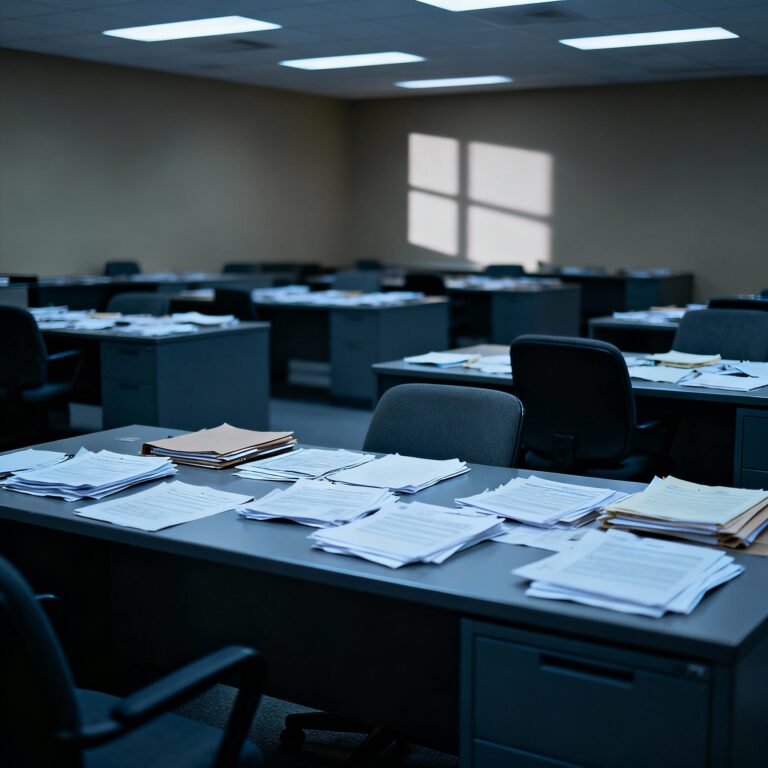

Depression screening tools, AI virtual counseling bots, and anxiety management programs are becoming increasingly common. It is no surprise that people value the effective and private service these tools offer, but there is growing concern that some diagnoses and assessments are beyond what a machine can do.

How should sensitive information be handled? How should vulnerable populations, such as adolescents and the elderly, be protected? Industry executives regard regulation as the first step toward establishing public confidence. They hold that technology will never substitute for the listening and careful psychotherapy of trained mental health workers. Still, the hope is to reach the untold millions of people who do not seek help because of cost, stigma, or distance.

Patient advocates come at the issue from a different angle. They hope the market will now be subject to FDA regulation that ensures mental health devices work safely and effectively, rather than remaining a marketplace dominated by devices that have never been through FDA testing. The general view seems to be that the many AI tools now available can make the mental health care system more workable, but not without accountability, ethical values, and essential checks.

Why Do AI for Mental Health Apps Need Regulation?

User protection is paramount in the face of inaccurate guidance and privacy concerns. In a market full of digital tools, oversight guards against unsubstantiated claims and ensures real, evidence-based support for those who need it most.

The rise of AI for Mental Health tools has created a new era of accessibility in psychological care. From apps that screen for depression to AI-powered virtual counseling bots, the number of AI for Mental Health platforms has grown rapidly. While these tools provide privacy and convenience, they also raise questions about safety, data security, and ethical use.

The FDA’s creation of the AI Mental Health Devices Regulation Advisory Committee is the first major step in setting global standards. Regulators are focusing on how AI for Mental Health applications handle sensitive patient data, particularly when dealing with vulnerable populations like adolescents and older adults. Without clear rules, even the most advanced AI for Mental Health solutions can expose users to risks of misdiagnosis or misuse of personal information.

Industry leaders largely support this move, noting that AI for Mental Health products can never fully replace the listening, empathy, and expertise of trained clinicians. Yet, they also emphasize that responsible oversight is essential for building public trust. Millions of people who avoid therapy due to stigma, cost, or distance may benefit from regulated AI for Mental Health apps if they are proven safe and effective.

Frequently Asked Questions

Which devices does the FDA evaluate?

The FDA evaluates apps and other software functions such as chatbots and similar interactive tools, wearable digital technology, and other internet-based utilities.

Why should AI apps for mental health be regulated?

Because your mental health matters. Without regulation, these apps could give questionable mental health advice, handle users’ private data carelessly, or make unprovable claims. Regulation is about ensuring that your mental health tools are reliable, safe, and validated.

What tools does the FDA review?

It’s not only about expensive medical machines. The FDA reviews certain types of applications, chatbots, smart gadgets, and internet programs that assess, track, or remotely assist mental health care, particularly those with embedded diagnostic or therapeutic features.

Can AI take the place of a therapist or a psychiatrist?

Not exactly. AI can assist with mental health support through symptom tracking, scheduling, reminders, and basic coping strategies. Beyond that, AI lacks the essential human touch and the sophisticated reasoning that only a trained professional can provide.

Will this change which apps I can download?

Quite possibly. In the future, stricter standards might reduce the number of untested and misleading apps. The upside is that the apps that remain should provide better support for mental health issues.